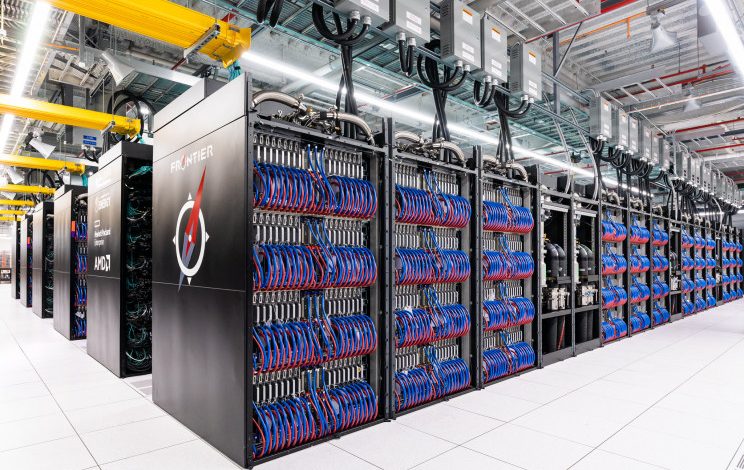

The world’s fastest supercomputer — called Frontier — is whizzing away in a national lab located in the foothills of East Tennessee. It’s the only machine in the world that has ever demonstrated that it could perform more than one quintillion (that’s 1,000,000,000,000,000,000) calculations per second. In the language of computer science, that’s called an exaflop.

Frontier’s project leader, Justin Whitt, told IE that his favorite way to explain the scale of his team’s achievement is to imagine if every single person on Earth could pull out a pen and paper and do one simple math problem per second. If all 7.8 billion of us worked day and night, it would take four years to do the amount of math that Frontier can do in a single second.
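Whitt’s comparison can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted above (one quintillion calculations, 7.8 billion people, one calculation per person per second). The length of a year (365.25 days) and the function name are assumptions made for illustration.

```python
# Back-of-the-envelope check of the pen-and-paper comparison.
EXAFLOP = 10**18            # one quintillion calculations per second (from the article)
WORLD_POPULATION = 7.8e9    # people, each doing one calculation per second (from the article)
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumption: average year of 365.25 days


def years_for_humanity(calculations: float, people: float = WORLD_POPULATION) -> float:
    """Years needed for `people` to finish `calculations` at one per person per second."""
    seconds = calculations / people
    return seconds / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(f"{years_for_humanity(EXAFLOP):.2f} years")
```

Under those assumptions the result comes out at roughly four years, which agrees with the figure Whitt gives.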

Whitt started his career as a fluid dynamicist: “My primary interest in supercomputers was using them to solve hard fluid dynamics problems to design things like aircraft and wind turbines.” He arrived at Oak Ridge National Lab in 2009 to work on Kraken, which was at one point the world’s third-fastest supercomputer. In 2018, he was tapped to lead the Frontier project.

Interesting Engineering recently sat down with Whitt to learn more about Frontier and get the story behind the fastest computer on the planet.

This interview has been edited for length and clarity.

IE: Is a supercomputer just a really big computer? What are the distinctive attributes that make a supercomputer qualitatively different?

Whitt: In some ways, yes, supercomputers are very similar to normal computers, like your desktop or laptop. They have a lot of the same components or similar ones.

A supercomputer has many, many central processing units and many, many graphics processing units. With Frontier, we segment that system up into nodes. There are about 9,400 nodes in the Frontier system. Each of those nodes has a central processing unit (a CPU) and four graphics processing units (those are GPUs). So already, that node is a lot more powerful than your typical laptop or desktop.

But then the differences really start to compound, because each of those nodes (each component on that board) has to be able to talk to every other component in the system. Basically, you’re writing software that goes out and leverages all this hardware at once to do its calculations. So, to keep things in sync, everything is connected up with a high-speed network. That’s something your typical computer doesn’t have.

When you think of computers, normally you think, “well, it’s got a hard drive.” Our computer doesn’t have a hard drive. All the data is written out to a separate high-speed storage system that’s also connected over that high-speed network.
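To put the node description in numbers, here is a rough sketch that multiplies out the figures Whitt gives (about 9,400 nodes, each with one CPU and four GPUs). The pair count is only an illustration of how many component-to-component conversations are possible, not a count of physical network links. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Processors per node, as described in the interview."""
    cpus: int = 1
    gpus: int = 4


def system_totals(nodes: int, spec: NodeSpec = NodeSpec()) -> dict:
    """Total processors and the number of distinct processor pairs in the system."""
    cpus = nodes * spec.cpus
    gpus = nodes * spec.gpus
    components = cpus + gpus
    # Every processor may need to exchange data with every other one:
    # n * (n - 1) / 2 distinct pairs.
    pairs = components * (components - 1) // 2
    return {"cpus": cpus, "gpus": gpus, "components": components, "pairs": pairs}


if __name__ == "__main__":
    # "About 9,400 nodes" is the article's approximate figure.
    for name, value in system_totals(9_400).items():
        print(f"{name}: {value:,}")
```

With the approximate node count, that works out to tens of thousands of GPUs and more than a billion possible pairs, which gives a sense of why a dedicated high-speed network is part of the machine.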

IE: What’s the connection between the simple mathematical equations the machine does so rapidly and the programs that it runs for scientists?

Whitt: Regardless of the application — if you are talking about modeling and simulation of different physical phenomena, or you’re talking about different techniques for machine learning or for AI — most of these programs involve multiplying matrices, particularly on the modeling and simulation side.

So basically, you’re modeling and simulating systems of equations. In a computer, you’re representing those as these very large matrices. When you solve matrices, you end up having to multiply one matrix by another. A lot of the work on the system, these operations per second, is going through and multiplying one matrix by another. We live and breathe linear algebra.
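The operation Whitt describes can be sketched in a few lines. This is a plain, unoptimized matrix multiply for illustration only, not the tuned GPU libraries a machine like Frontier would use. The operation count of 2·n·k·m is the conventional estimate (one multiply and one add per inner step), and the timing helper assumes an ideal, sustained one quintillion operations per second.

```python
def matmul(a, b):
    """Multiply an n-by-k matrix by a k-by-m matrix, both given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a) or any(len(row) != m for row in b):
        raise ValueError("matrix dimensions do not match")
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
        for i in range(n)
    ]


def matmul_flops(n: int, k: int, m: int) -> int:
    """Conventional estimate: one multiply and one add per inner-loop step."""
    return 2 * n * k * m


def seconds_at_rate(flops: int, rate: float = 1e18) -> float:
    """Idealized run time at a sustained rate (default: one exaflop per second)."""
    return flops / rate


if __name__ == "__main__":
    print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
    size = 100_000  # hypothetical matrix dimension
    print(seconds_at_rate(matmul_flops(size, size, size)), "seconds")
```

Real scientific codes rarely reach the ideal rate, so the timing is best read as a lower bound rather than a prediction.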

IE: How did Frontier get its start?

Whitt: Department of Energy computing projects start with establishing that there’s a need for a new computer. So, you look at what the scientists and engineers are doing on the current systems. It’s a very formal process where the DOE writes a mission statement that says, “In this timeframe, we need this many and this much more computing power.”

That happened back in 2018. From there, you start to define what that looks like and how you meet it. What are all the different ways you can meet that need, and what needs to be done to accomplish that?

IE: Once the goal is set, how do you begin the process of building something that has never existed before?

Whitt: You break it down into a lot of really big steps, and then you break those down into smaller steps. Basically, you’re figuring out what experts you need and what resources you can draw on.

For instance, you might not think that deploying a supercomputer takes a tremendous amount of construction, but with Frontier, it did because meeting that mission required bringing additional power and cooling infrastructure to the data center where the computer would be sitting. So, we brought in an additional 30 megawatts of electrical power, and 40 megawatts of cooling capacity to the building, just to be able to house the Frontier system. All that began later in 2018.

About the same time as the construction activities, we began working with different scientific teams to make sure that their applications (their scientific code or scientific software) were ready to go on the system when it gets here. We put a lot of effort into working with the scientific teams, providing them with technologies along the way that they can port their applications to, so they are ready to do real science on the machine on day one of operations. At this point, we’re a little over three years into the project.

IE: What else has your team done to prepare for the launch of Frontier?

Whitt: We’ve been refurbishing the data center. We’ve been hardening our internal infrastructure and getting it ready to connect the computer up when it gets here. And then we’ve been working with the vendor to design and prototype the technologies for the system and test those out along the way.

IE: What role do vendors play in a project like this?

Whitt: These projects are executed normally as public-private partnerships. In this case, after a competitive bid process for building the system, we selected Hewlett Packard Enterprise and their subcontractor Advanced Micro Devices (AMD) to work with. Hewlett Packard Enterprise is responsible for building the computer itself, and AMD is responsible for producing the different processors that go into the computer.

They each have their engineering staffs that are off working on designs and working on prototypes, and we have subject matter experts on our side who are working in a co-design process with them. Through a process called non-recurring engineering, we’re getting prototypes, we’re testing them, we’re evaluating them, and we’re providing feedback and design changes along the way.

IE: What’s it been like for you to watch this project go from paperwork to a hole in the ground to the world’s fastest supercomputer?

Whitt: It’s been awe-inspiring. It’s been incredible to be a part of. It’s the closest thing to a moonshot that I’ve ever been involved with. When we started out, we didn’t have the technologies. We had the goals, and we started building. It’s not clear in the beginning how you get to that endpoint, but it takes a tremendous number of really talented people to pull it off.

I think back to just the bid selection process. We said, based on the mission need, this is the kind of computer we’re building, and these are the kinds of things we need. Vendors from across the U.S. came in and said, “Well, we could build this type of computer, we could build that type of computer.” We then had to evaluate all these highly technical, highly complex bids. For just that meeting alone, we brought in about 150 subject matter experts from across the Department of Energy complex, from six other DOE labs, to help us evaluate different parts of the system and make a recommendation on the best path forward. You just don’t have the capability to do that in many other places in the world.

Then from there, each of the vendors has armies of engineers working on different pieces of the system. We have over 100 people at Oak Ridge National Lab that are working on the system. It takes a tremendous amount of effort, and a tremendous number of diverse, talented people to pull these things off. When you sit back and think about it and start to count all the people that have been a part of the system, it really is a breathtaking experience.

IE: How do you manage such a large project with so many people involved?

Whitt: We use a lot of pretty standard systems engineering and project management practices. We use Earned Value Management to track the progress on the project, but it really comes down to managing the complexity. There are a lot of different things we do to manage the complexity, like making those investments in maturing technologies and evaluating along the way. That’s a way of buying down our complexity-related risks on the project.

You could sum up a lot of our different methodologies as “fail early.” When you have this much complexity, you have to narrow in on your true path as quickly as possible. So, on every front, you want to push things to fail as early as possible. You want to make those investments and get that technology evaluated to see if it’s sufficient or not as early as you possibly can. And so that’s one of the mantras that we work under: fail early, start early.
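For readers unfamiliar with Earned Value Management, the sketch below shows the standard textbook indicators it is built on. Nothing here comes from the Frontier project: the class name is hypothetical and the dollar figures are invented purely to show how the ratios behave.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EarnedValueSnapshot:
    """Standard EVM inputs at a status date (all in the same currency unit)."""
    planned_value: float  # PV: budgeted cost of work scheduled so far
    earned_value: float   # EV: budgeted cost of work actually completed
    actual_cost: float    # AC: what the completed work actually cost

    @property
    def cost_variance(self) -> float:
        return self.earned_value - self.actual_cost

    @property
    def schedule_variance(self) -> float:
        return self.earned_value - self.planned_value

    @property
    def cost_performance_index(self) -> float:
        return self.earned_value / self.actual_cost

    @property
    def schedule_performance_index(self) -> float:
        return self.earned_value / self.planned_value


if __name__ == "__main__":
    # Hypothetical numbers, in millions of dollars.
    status = EarnedValueSnapshot(planned_value=100.0, earned_value=90.0, actual_cost=120.0)
    print("CPI:", status.cost_performance_index)      # below 1 means over budget
    print("SPI:", status.schedule_performance_index)  # below 1 means behind schedule
```

An index that drifts below 1 early is the kind of signal that fits the “fail early” approach: it flags trouble while there is still time to change course.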

IE: Can you say more about that mentality of failing early?

Whitt: When you start out these systems, they’re as sexy as they ever get because they exist only on a PowerPoint slide — and they look fantastic. Many, many components that are used to build this system are serial number one parts. They’re the first parts ever made coming right off the assembly lines from technologies that don’t exist when you start. It’s the same on the software side. A lot of your software technologies don’t exist.

The systems they propose in the beginning are these fantastic things that push all these different boundaries. You never fully realize that when you’re deploying systems of this size and complexity. So, for instance, in our investments, we say, okay, these are the key technologies that have to happen for us to have a system that researchers can use to accomplish their science, and we invest in those technologies. We basically bring them forward as early as possible in time, and we set up evaluations along the way.

So basically, if one of those technologies is going to fail — if it’s not going to work out — then we know as soon as possible, and we have a chance to investigate a different technology or make some kind of contingency plan.

IE: Could you give an example of a piece of hardware in the machine that didn’t exist when you got started?

Whitt: Neither the CPU nor the GPUs they’re using in the system existed. Those were planned several years out from actually being fabricated. The high-speed network basically has network interface cards on the board, and it has switches in the system. Neither of those things existed. The software for the high-speed network didn’t exist. All these things had to be created as we went.

The cabinets that house all these components didn’t exist. They’re about the size of a refrigerator, and they’re filled with computer hardware. Those cabinets contain the systems that distribute the power to all those different computer components. They also contain the systems that distribute the cooling to those components. For us to be able to make computers as dense as we do, you have to cool down at the board level. So, those cabinets themselves are incredibly complex. When we started, those didn’t exist, either. They had to be designed and refined along the way.

IE: This system must produce an enormous amount of heat. Can you explain the cooling system?

Whitt: We’ve got 40 megawatts of water cooling capacity that we use to get the heat back out of the system. The system uses about 29 megawatts of electricity. If you use that much power, you have to get that much heat back out, basically. It’s all done with cooling towers, so it makes it a super-efficient data center. We’re projecting that our power usage effectiveness (PUE) will be about 1.03, which will put it with some of the most efficient data centers in the nation. But at this center, you have cooling towers that are attached to massive pumps and massive heat exchangers that circulate about 6,000 to 10,000 gallons of water per minute to the system to get that heat back out.
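The cooling figures can be related to one another with some rough physics. The efficiency number Whitt cites corresponds to the data-center metric usually called power usage effectiveness (PUE), defined as total facility power divided by the power used by the computing equipment. The sketch below assumes that the 29 megawatts is the computing load, that all of it leaves as heat in the water, and that the water has textbook properties (about 1 kg per liter and 4,186 joules per kilogram per kelvin). The function names are hypothetical.

```python
LITERS_PER_US_GALLON = 3.785411784
WATER_DENSITY_KG_PER_L = 1.0    # assumption: approximate value for liquid water
WATER_HEAT_CAPACITY = 4186.0    # J/(kg*K), assumption: approximate textbook value


def facility_power_mw(it_power_mw: float, pue: float) -> float:
    """Total facility power implied by PUE = facility power / IT power."""
    return it_power_mw * pue


def water_temp_rise_k(heat_mw: float, flow_gpm: float) -> float:
    """Temperature rise of the water if it carries away `heat_mw` of heat.

    Uses Q = m_dot * c_p * delta_T, solved for delta_T.
    """
    mass_flow_kg_per_s = flow_gpm * LITERS_PER_US_GALLON * WATER_DENSITY_KG_PER_L / 60.0
    return heat_mw * 1e6 / (mass_flow_kg_per_s * WATER_HEAT_CAPACITY)


if __name__ == "__main__":
    total = facility_power_mw(29.0, 1.03)
    print(f"Facility power: {total:.2f} MW, overhead: {total - 29.0:.2f} MW")
    for gpm in (6_000, 10_000):
        print(f"{gpm:,} gal/min -> water warms by about {water_temp_rise_k(29.0, gpm):.1f} K")
```

Under these assumptions, the overhead beyond the computer itself is under one megawatt, and the water warms by somewhere between roughly 11 and 18 kelvin depending on the flow rate.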

IE: Now that Frontier is up and running, what kind of science will it be used for?

Whitt: Being a Department of Energy Lab, we of course have a focus on materials and energy. You’ll have a lot of research going on to identify new materials, new power sources, new power storage devices, and those kinds of things.

We also have quite a bit of work in the health area, including a lot of research in drug discovery and treatment efficacy. We do a lot of protein docking and other things that are important for drug discovery on the system, but it’s really a broad array of application areas.

We’ve been working with the goal of having 24 different scientific applications ready to use the system on day one, and those span a really wide array of different science domains.